Blog

Here you will find technical articles, presentations and news about architecture and system development. Stay up to date, follow us on LinkedIn

By the time you are reading this I’m quite confident many of you are already quite weary of reading about the amazing possibilities of AI in general and LLMs in particular. But perhaps you are still, on a general level, positive about AI and happy to use the tools it has provided so far, but quietly asking yourself “What now? What is next?”. This story is for you. Too.

This story, in which the most interesting and relevant events occurred as recently as August 20th, actually began all the way back in May of this year. May 13th to be precise. On this day my friend and colleague Ove Lindström sent out a company-wide question asking if anyone was interested in attending a one-day workshop on LLMs and Langchain, organized by JFokus and presented by Marcin Szymaniuck from TantusData. Without knowing anything about Langchain, but seeing an opportunity to pick up something new, I was quick to sign up. As the day of the workshop came and workshopping commenced, it quickly became apparent that one day was not enough to understand all the intricacies of the Langchain framework and how it enables developers to make use of LLMs, but it was enough to tickle my curiosity.

While I’m in no way capable of providing a proper description or summary of everything that Langchain provides, I think I can provide the main highlights and point the discerning scholar to the Langchain website for further investigation. Langchain provides a common API to work with various LLMs from different providers, notably OpenAI (ChatGPT), Anthropic (Claude), Google (Gemini) and many more.

As the name suggests, Langchain can be used to chain different operations together to make effective use of an LLM. Such a chain could, for example, parse an input string into a UserPrompt, send that prompt to an LLM, invoke any number of Langchain tools on the output from the LLM, then call a Langchain retriever on the output from the tools, etc.

We’ll discuss some of these concepts further down. For ease-of-use Langchain also provides its own expression language: LCEL (LangChain Expression Language) that helps keep the code short, concise and altogether neat.
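
The pipe idea behind LCEL can be illustrated without the library itself. The sketch below is not Langchain code: `Step` is a hypothetical stand-in for a chainable unit, and `fake_llm` simply echoes its input instead of calling a model. It only shows how overloading `|` lets small steps be composed into one chain that is run with a single `invoke` call.

```python
from typing import Any, Callable


class Step:
    """Hypothetical chainable unit, used only to illustrate pipe composition."""

    def __init__(self, func: Callable[[Any], Any]) -> None:
        self.func = func

    def __or__(self, other: "Step") -> "Step":
        # `a | b` builds a new step that runs a first, then feeds its output to b.
        return Step(lambda value: other.func(self.func(value)))

    def invoke(self, value: Any) -> Any:
        return self.func(value)


# Three toy steps: build a prompt, fake an LLM call, parse the reply.
to_prompt = Step(lambda name: f"Suggest a slogan for {name}.")
fake_llm = Step(lambda prompt: f"ECHO: {prompt}")  # placeholder, no real model is called
parse_output = Step(lambda reply: reply.removeprefix("ECHO: ").strip())

chain = to_prompt | fake_llm | parse_output
result = chain.invoke("Temporal Titans")
```

In a real application the middle step would be a model object and the other steps would be prompt templates and output parsers provided by the framework.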

Breezing past the summer months of June and July, August was coming up and with that a new and exciting opportunity, a chance to attend an AI hackathon, organized by Cillers and Monterro, and we are now finally arriving at the main part of the story.

Although the main event of the hackathon was scheduled for August 20, the challenge was to be announced on August 4, when we also had a chance to team up with other attendees if we weren’t already part of a team. I did not yet have a team, but was extremely lucky to partner up with two new friends: Jennifer Feenstra-Arengård (frontend) and Fredrik Engberg (full-stack + AI), both highly skilled developers and all-round very nice people!

The challenge: to build an intelligent scheduling application. Further, for any team or person aiming to compete for the hackathon prize, a paid trip to San Francisco(!), it was a prerequisite to use the Cillers technology stack.

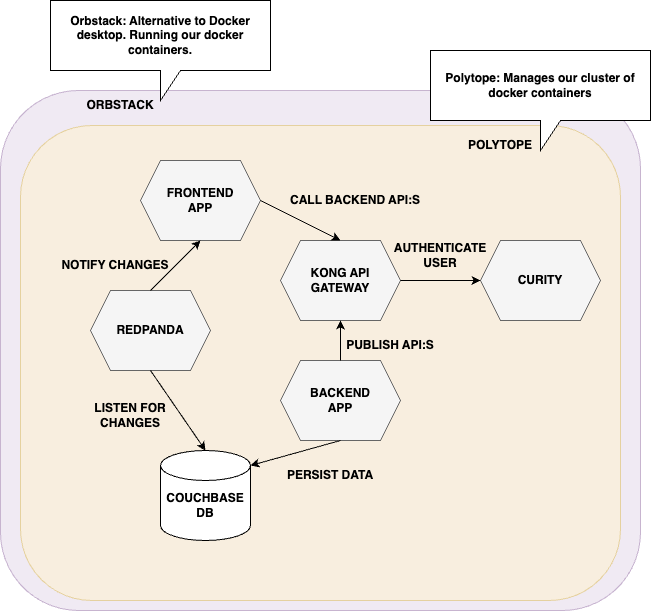

So what was this technology stack? Well, the stack covered both what I would consider infrastructure concerns, i.e. the services or platforms that the application depends on, as well as the software architecture of the components we developed.

Let’s start with the infrastructure.

Alternative to Docker Desktop. Worked like a charm, easy to use and according to some voices it is much less resource-gobbling compared to Docker Desktop.

Container manager, so something like an alternative to Kubernetes. After dealing with some initial hiccups, due to unfamiliarity on my part, it performed perfectly. Also, really nice terminal UI.

An event streaming platform that can be used as an alternative to Apache Kafka. Written in C++ it is lightning-fast and light-weight. Definitely something I will try out more thoroughly!

API-Gateway, my colleague Björn Beskow has already written a great blog series about Kong, so please feel free to take a look at that here, but after you have finished reading that don’t forget to return to this blog!

Authentication and authorization server. Developed by a Swedish company that has now expanded internationally.

Very powerful NoSQL DB for handling highly volatile data. Actually the only part of the infrastructure that I at least had heard about, and now very much appreciate.

When it came to the application development, the frameworks involved were probably more familiar to most developers: React and TypeScript on the frontend, Python with FastAPI running on the Uvicorn web server on the backend, and GraphQL as the interface between frontend and backend. More familiar perhaps, but this is of course where all the interesting development was to take place.

So, going back to the challenge: develop a smart scheduling application. What did that mean? To answer that question Jennifer, Fredrik and I met up at the Callista Stockholm office on a sunny Sunday afternoon for some classic whiteboard brain-storming, as the rest of Stockholm strolled around on Drottninggatan a few floors below, naturally oblivious to the high-powered application design taking place above their heads. First question of the day: what should we call our team? After a number of alternatives had been tossed around, discussed and weighed, we eventually agreed upon the name “Temporal Titans”. With that settled, it was clearly time for a fika break!

After resuming the brain-storming, now focused on defining the challenge at hand, we settled on what we would consider the minimum requirements for an intelligent scheduling application. In all honesty, that was also just about as much as we would have time to implement for the hackathon. It had to include:

Obviously, that would only be the bare minimum (keep in mind, this was a hackathon, not a long development project…), and our wish-list included many more features that should be fairly simple to add once the foundations were in place, such as, but not limited to: a feature to enable staff members to express days or hours when they would not be available for work, the possibility for the customer (or manager) to divide each work day into any number of shifts that could then have different staff allocations, and much, much more.

It shouldn’t come as a surprise these days that when we say “an intelligent … application”, what we really mean is an application for some particular purpose that makes use of either an LLM or generative AI. In our case it would be an LLM, and we decided to use it first of all for the first feature, the customer onboarding.

I believe all the major LLMs have public APIs that developers can use to integrate the LLM into their application, but we quickly decided to use Langchain, as its documentation and examples are very easy to understand and it is also extremely easy to use in Python applications. All you need to do is:

pip install langchain

After that you need to install support for the different LLMs you want to use, e.g.

pip install -qU langchain-openai

That package adds support for OpenAI's models, i.e. the ones behind ChatGPT. Finally, you need an API key to communicate with the LLM. In our case a key was provided by Cillers, the organizers of the hackathon.

Now we could create a chatbot for the onboarding feature to gather the necessary information from the user. But how do we get the chatbot to gather the information we want?

We set this up in a few steps. First we created the chatbot, stating that we wanted to use the OpenAI model GPT-4o:

    from langchain_openai import ChatOpenAI

    llm = ChatOpenAI(model="gpt-4o", temperature=0.5)

Then we wanted the chatbot to be able to populate our domain object, which we called the BusinessForm object, to complete the organisation onboarding process. To do this, we made use of a Langchain feature called “tools”: special functions that the LLM can ask to have called. Below is an example of a tool to set the business name. To turn a function into a Langchain tool we need to decorate it and add a description of what it does. This description is passed to the LLM, which can then understand the purpose of the function and call it appropriately.

    from typing import Annotated

    from langchain_core.tools import InjectedToolArg, tool

    # BusinessForm is our own domain class, defined elsewhere in the application.
    @tool
    def register_business_name(
        form: Annotated[BusinessForm, InjectedToolArg], business_name: str
    ) -> str:
        """Asks the user to input the business name for it to be stored in the system. This information is mandatory to proceed."""
        if len(business_name) >= 5:
            form.business_name = business_name
            return "Check. Business name has been registered. Ask the user for the days on which the business is open."
        else:
            return "Business name must be at least 5 characters long. Ask the user to provide a valid business name."

This function, decorated with “@tool”, takes a form of type BusinessForm on which it operates, and a string business_name. The form object is the form owned by our agent, and it requires some Python hacking to get it added to the function calls. The second parameter is supplied by the LLM, which figures out what part of a message from the user is the business name and passes it to the tool call. The docstring at the top of the function explains to the LLM what the tool is used for, and the returned string is used by the LLM to generate a response back to the user.
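
Roughly speaking, what the model gets to see for each tool is a name, a description and a list of parameters. The library-free sketch below mimics that with the standard `inspect` module. `describe_tool` is a hypothetical helper, not part of Langchain, and the real decorator builds a richer schema; the point is only that the function name and docstring are the raw material for the tool description.

```python
import inspect
from typing import Callable, Dict


def describe_tool(func: Callable) -> Dict[str, object]:
    """Collect the name, docstring and parameter names of a function."""
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func),
        "parameters": list(inspect.signature(func).parameters),
    }


def register_business_name(business_name: str) -> str:
    """Store the business name. This information is mandatory to proceed."""
    return f"Registered {business_name}"


spec = describe_tool(register_business_name)
```

A vague or missing docstring leaves the model guessing about when to use the tool, which is why the description deserves as much care as the code.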

Finally we want to chain it all together. We do this by creating a list of all the tools we have for the chatbot to use, and then bind those tools to the LLM.

    tools = [
        register_business_name,
        register_business_days,
        register_open_hours,
        register_number_of_employees_needed,
    ]

    llm_with_tools = llm.bind_tools(tools)
    tool_map = {t.name: t for t in tools}

Now, for each interaction with the user we can invoke our tools and in that way populate our domain object.

    from copy import copy
    from typing import List

    from langchain_core.messages import AIMessage, BaseMessage, HumanMessage
    from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder

    prompt = ChatPromptTemplate.from_messages(
        [
            (
                "system",
                """You are an efficient agent that assists the user by asking specific... (abbr.)""",
            ),
            MessagesPlaceholder(variable_name="messages"),
        ]
    )

    conversation_start: List[AIMessage] = [
        AIMessage(content="Hello I am your AI agent, let me help you get started."),
        AIMessage(content="What is the name of your business?"),
    ]

    # The lines below are excerpts from our agent class: `self` is the agent
    # and `message` is the latest input from the user.
    conversation: List[BaseMessage] = copy(self.conversation_start)
    form: BusinessForm = BusinessForm()

    conversation.append(HumanMessage(content=message))
    prompt_instance = prompt.invoke(
        {
            "messages": conversation,
        }
    )
    agent_response = llm_with_tools.invoke(prompt_instance.messages)
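
The excerpt above stops at the model's response and does not show how the requested tools are then executed. A minimal sketch of that step, under some assumptions: the tool requests are represented as plain dictionaries with a `name` and an `args` entry (similar in shape to what the model returns, but hand-written here), the tools are ordinary functions rather than Langchain tool objects, and `BusinessForm` is a simplified stand-in for our real class. `run_tool_calls` is a hypothetical helper name.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class BusinessForm:
    """Simplified stand-in for the team's domain object."""
    business_name: Optional[str] = None


def register_business_name(form: BusinessForm, business_name: str) -> str:
    """Validate and store the business name on the form."""
    if len(business_name) >= 5:
        form.business_name = business_name
        return "Check. Business name has been registered."
    return "Business name must be at least 5 characters long."


# Same idea as tool_map in the article, but with plain functions.
tool_map: Dict[str, Callable[..., str]] = {
    "register_business_name": register_business_name,
}


def run_tool_calls(tool_calls: List[dict], form: BusinessForm) -> List[str]:
    """Run each requested tool, injecting the form that the model never sees."""
    results = []
    for call in tool_calls:
        tool_func = tool_map.get(call["name"])
        if tool_func is None:
            results.append(f"Unknown tool: {call['name']}")
            continue
        # The model supplies only the user-derived arguments; the form is added here.
        results.append(tool_func(form=form, **call["args"]))
    return results


# Hand-written example of what a tool request from the model might look like.
example_calls = [{"name": "register_business_name", "args": {"business_name": "Callista"}}]
form = BusinessForm()
replies = run_tool_calls(example_calls, form)
```

The returned strings would then be appended to the conversation so that the model can phrase its next question to the user.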

With the AI agent and Langchain tools we were able to complete the onboarding process. Next, we continued with building the data model and integrating the different parts of the application, of which a central part was the week schedule. This schedule should show all the work shifts for all employees over a week. But we needed a tool to generate this week schedule. It was time to implement feature #3.

To generate an optimized week schedule we decided it would be a good idea to use a dedicated tool, and Fredrik suggested we use Google OR-Tools, which turned out to be a great choice for this kind of task. OR-Tools enables the developer to define a model on which to operate, in our case our week schedule, and then define a set of constraints to apply to that model, e.g. for each workday in our model we need to have at least x employees allocated.

Once the constraints are defined we can tell OR-Tools to optimize our model and return an optimized result. Sounds like magic, but it actually works! Let’s take a look at some of the code.

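    from ortools.sat.python import cp_model

    model = cp_model.CpModel()
    solver = cp_model.CpSolver()
    # One independent row per employee; [[False]*7]*n would repeat the same list object n times.
    scheduled_template = [[False] * 7 for _ in self.employee_ids]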

Here we have created a constraint model, a solver, and a matrix with one row per employee and 7 columns (the days of the week) that holds whether each employee is scheduled for that particular day. Next we add boolean variables to the model that mirror the scheduled_template matrix.

    for ... # Abbreviated nested for loops to iterate over scheduled_template...
        scheduled[(emp_id, wd)] = model.new_bool_var(f"scheduled_{emp_id}_{wd}")

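Now we have a model on which we can apply constraints, for example to ensure that we schedule at least a minimum number of employees for each day. On top of the constraints we also give the solver an objective to optimize. The snippet below is such an objective: it asks the solver to maximize the number of assignments that coincide with the entries set in scheduled_template.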

    model.maximize(
        sum(
            scheduled_template[emp_id_idx][week.index(wd)] * scheduled[(emp, wd)]
            for emp_id_idx, emp in enumerate(self.employee_ids)
            for wd in self.business_days
        )
    )

Finally, we can tell the solver to optimize our model based on our constraints.

    status = solver.solve(model)

    if status == cp_model.OPTIMAL or status == cp_model.FEASIBLE:
        for ... # Abbreviated nested for-loops over week days and employees
            # If the optimizer sets that an employee should be assigned to that day...
            if solver.value(scheduled[emp_id, wd]):
                if emp.user_id == emp_id:
                    # Then we update the employee domain object assigned to that day
                    emp.wd.is_assigned = True
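
To make the split between hard constraints and the objective concrete, here is a tiny library-free sketch that solves the same kind of problem by brute force instead of with CP-SAT. All names and numbers are made up for illustration: three employees, a two-day week, a minimum of two people per day, and a set `preferred` that plays the role of scheduled_template. In OR-Tools the staffing rule would typically be added to the model as a linear inequality (e.g. via `model.add(...)`) and the solver would search far more cleverly than this exhaustive loop.

```python
from itertools import product
from typing import Dict, Optional, Tuple

employees = ["anna", "bo", "cleo"]  # hypothetical staff
days = ["mon", "tue"]               # shortened week keeps the search space at 2**6
min_staff_per_day = 2               # assumed hard constraint

# Plays the role of scheduled_template: assignments we would like to see.
preferred = {("anna", "mon"), ("bo", "mon"), ("cleo", "tue")}

slots = [(emp, day) for emp in employees for day in days]
Assignment = Dict[Tuple[str, str], bool]


def is_feasible(assignment: Assignment) -> bool:
    """Hard constraint: every day has at least min_staff_per_day people."""
    return all(
        sum(assignment[(emp, day)] for emp in employees) >= min_staff_per_day
        for day in days
    )


def score(assignment: Assignment) -> int:
    """Objective: number of assignments that match a preference."""
    return sum(1 for slot in slots if assignment[slot] and slot in preferred)


best: Optional[Assignment] = None
best_score = -1
# Try every combination of True/False over all (employee, day) slots.
for values in product([False, True], repeat=len(slots)):
    candidate = dict(zip(slots, values))
    if is_feasible(candidate) and score(candidate) > best_score:
        best, best_score = candidate, score(candidate)
```

Infeasible candidates are discarded outright, while feasible ones compete on score. Exhaustive search only works for toy sizes like this one, which is exactly why a constraint solver is the better fit for a real week schedule.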

Of course, all of this was just a small part of the entire smart scheduling application, and even though we got the different parts working, we were uncertain until the very end whether we would have time to connect them all. About an hour or so before the deadline we did manage to get the whole application to work, which I think says a lot about the infrastructure and frameworks we got to use for the hackathon.

First of all, if you have managed to read all the way to the end, a great big thank you! This was a long blog post, but I hope it can give some inspiration for delving further into any of these technologies. Personally I am very happy to have been given an opportunity to dig into Langchain a bit more, as well as OR-Tools. I can also really recommend testing the Cillers tech stack briefly explained above. And finally, special thanks to my teammates Jennifer Feenstra-Arengård and Fredrik Engberg, and also to Per Lange, Peder Linder and the rest of the Cillers team for organising a great hackathon!